Transform data

A data platform typically involves people in various roles, each contributing in different ways and to different parts of the pipeline. With dbt, for example, analysts may focus primarily on modeling the data but still want their models integrated into the overall pipeline.

In this step, we will incorporate a dbt project to model the data we loaded with DuckDB.

1. Add the dbt project

First, we need a dbt project to work with. Run the following command to add a dbt project to the root of the etl_tutorial project:

git clone --depth=1 https://github.com/dagster-io/jaffle-platform.git transform && rm -rf transform/.git

The root of the project now contains a transform directory that holds our dbt project:

.
├── pyproject.toml
├── src
├── tests
├── transform # dbt project
└── uv.lock

This dbt project already contains models that work with the raw data we brought in previously and requires no modifications.
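As an optional sanity check, a short script can confirm that the clone produced a dbt project where the later steps expect it. This is a minimal sketch and not part of the tutorial itself: the helper name `find_dbt_projects` is hypothetical, and it relies only on the fact that a dbt project directory contains a `dbt_project.yml` file.

```python
from pathlib import Path


def find_dbt_projects(root: Path) -> list[Path]:
    """Return the directories under `root` that contain a dbt_project.yml file."""
    return sorted(p.parent for p in root.rglob("dbt_project.yml"))


if __name__ == "__main__":
    # Assumes the script is run from the root of the etl_tutorial project.
    for project_dir in find_dbt_projects(Path("transform")):
        print(project_dir)
```

If the clone succeeded, the printed paths should include the directory that is later passed to --project-path.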

2. Scaffold a dbt component definition

Now that we have a dbt project to work with, we need to install both the Dagster dbt integration and the dbt adapter for DuckDB:

With uv:

uv add dagster-dbt dbt-duckdb

With pip:

pip install dagster-dbt dbt-duckdb

We still want our dbt project to be represented as assets in our graph, but we will define them in a slightly different way. In the previous step, we manually defined our assets by writing functions decorated with @dg.asset. This time, we will use components, which are predefined ways to interact with common integrations or patterns. In this case, we will use the dbt component to quickly turn our dbt project into assets.

The dbt component was installed when we installed the dagster-dbt library. This means we can now scaffold a dbt component definition with the dg scaffold defs command:

dg scaffold defs dagster_dbt.DbtProjectComponent transform --project-path transform/jdbt

Creating a component at <YOUR PATH>/src/etl_tutorial/defs/transform.

This looks similar to scaffolding assets, but it also includes the --project-path flag to set the directory of our dbt project. After the dg command runs, the directory transform is added to the etl_tutorial module:

src
└── etl_tutorial
    └── defs
        └── transform
            └── defs.yaml

3. Configure the dbt defs.yaml

Scaffolding the dbt component creates a single file, defs.yaml, which configures the Dagster dbt component definition. Unlike the assets file, which was written in Python, components provide a low-code interface in YAML. Most of the file's contents were generated when we scaffolded the component definition with dg. The path we provided to the dbt project is set in the project attribute under attributes:

type: dagster_dbt.DbtProjectComponent

attributes:
  project: '{{ project_root }}/transform/jdbt'

To check that Dagster can load the dbt component definition correctly in the top-level Definitions object, run dg check defs again:

dg check defs

All component YAML validated successfully.

All definitions loaded successfully.

The component is correctly configured for our dbt project, but we need to make one addition to the YAML file:

type: dagster_dbt.DbtProjectComponent

attributes:
  project: '{{ project_root }}/transform/jdbt'
  translation:
    key: "target/main/{{ node.name }}"

The translation attribute aligns the keys of our dbt models with the assets we defined previously. With matching keys, Dagster can connect the dbt models to those assets and show the correct lineage across the whole graph.
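To make the effect of the key template concrete, the sketch below mimics how it could expand for a given model name, and how the resulting slash-separated string corresponds to a multi-part asset key. It is a plain-Python illustration only: Dagster renders the template itself, the helper `render_key` is hypothetical, and the model name `stg_customers` is used purely as an example.

```python
import re

KEY_TEMPLATE = "target/main/{{ node.name }}"


def render_key(template: str, node_name: str) -> list[str]:
    """Substitute the node name into the template and split the result into key parts."""
    # A function replacement avoids any special handling of backslashes in node_name.
    rendered = re.sub(r"\{\{\s*node\.name\s*\}\}", lambda _: node_name, template)
    return rendered.split("/")


print(render_key(KEY_TEMPLATE, "stg_customers"))
# ['target', 'main', 'stg_customers']
```

Every dbt model therefore receives the same target/main prefix, followed by its own name.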

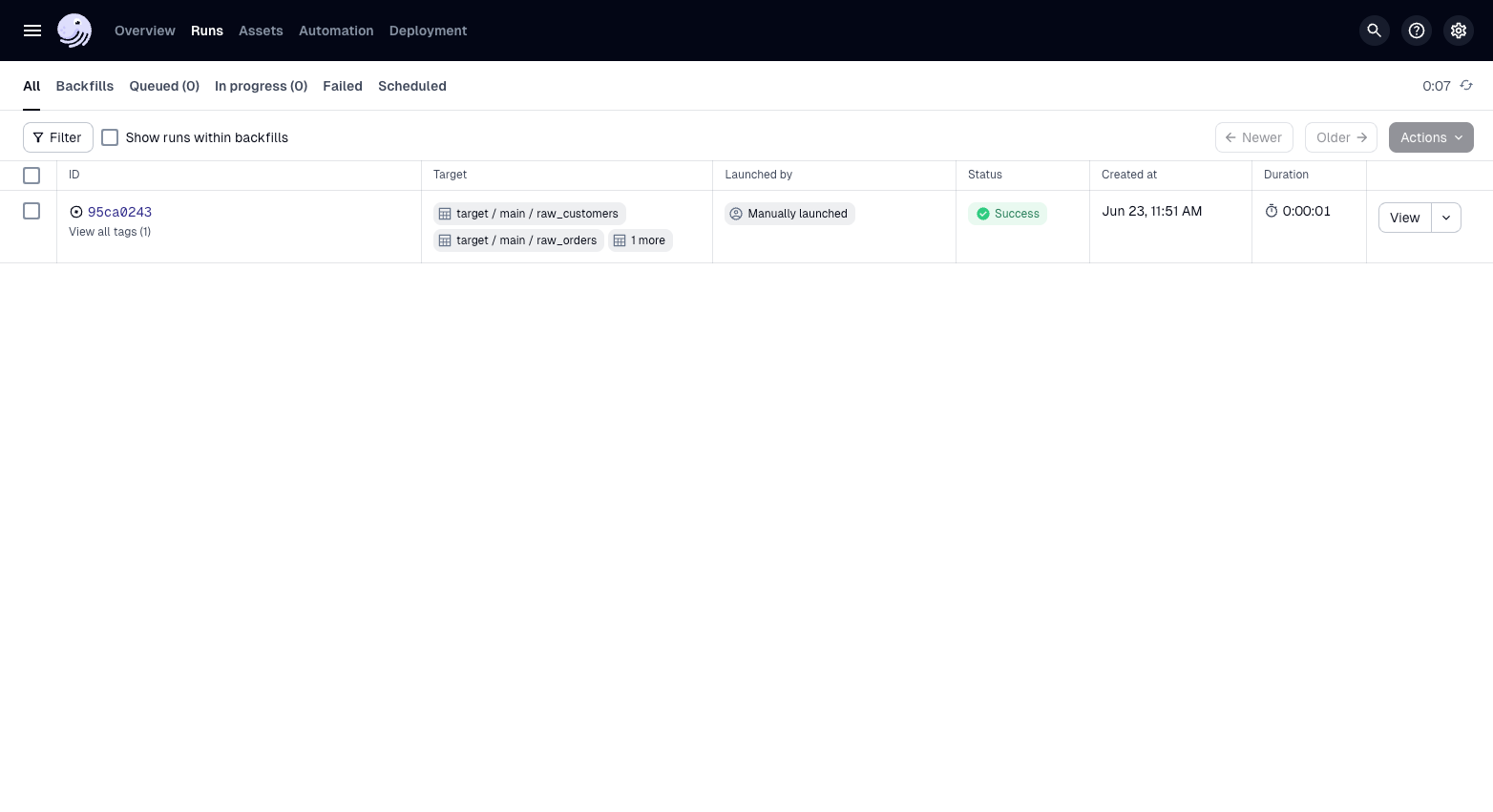

4. Materialize assets

To materialize the assets:

- Click Assets, then click "View global asset lineage" to see all of your assets.

- Click Materialize all.

You can also materialize your assets from the command line with the dg launch command.

Summary

We have now layered dbt into the project. The etl_tutorial module should look like this:

src
└── etl_tutorial
    ├── __init__.py
    ├── definitions.py
    └── defs
        ├── __init__.py
        ├── assets.py
        └── transform
            └── defs.yaml

Regardless of how your assets are added to your project, they will all appear together within the asset graph.

You might be wondering about the relationship between components and definitions. At a high level, a component builds a definition for a specific purpose.

Components are objects that programmatically build assets and other Dagster objects. They accept specific parameters and use them to build the actual definitions you need. For DbtProjectComponent, the parameter is the dbt project path, and the definitions it creates are the assets for each dbt model.

Definitions are objects that combine metadata about an entity with a Python function that defines how it behaves. For example, when we applied the @dg.asset decorator to the functions for our DuckDB ingestion, we told Dagster both what each asset is and how to materialize it.
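The relationship can be pictured with a toy model. The sketch below uses no Dagster APIs: `ToyAssetDefinition` and `ToyDbtComponent` are hypothetical stand-ins, and the model names are supplied by hand rather than read from a dbt project. It only illustrates the idea that a component takes parameters and produces one definition per model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyAssetDefinition:
    """Metadata (the key) paired with a function that says how to build the asset."""

    key: str
    materialize: Callable[[], str]


@dataclass
class ToyDbtComponent:
    """Accepts parameters and builds one definition per dbt model."""

    project: str
    model_names: list[str]

    def build_defs(self) -> list[ToyAssetDefinition]:
        return [
            ToyAssetDefinition(
                key=f"target/main/{name}",
                # Bind `name` as a default argument so each function keeps its own model.
                materialize=lambda name=name: (
                    f"dbt build --select {name} (project: {self.project})"
                ),
            )
            for name in self.model_names
        ]


# Illustrative parameters; the model names here are assumptions.
component = ToyDbtComponent(
    project="transform/jdbt", model_names=["stg_customers", "stg_orders"]
)
for definition in component.build_defs():
    print(definition.key, "->", definition.materialize())
```

The component holds only configuration, while each definition it returns carries both a key and the behavior needed to produce that asset.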

Next steps

In the next step, we will add a DuckDB resource to our project to more efficiently manage our database connections.