Dagster ETL pipeline

In this tutorial, you'll build a full ETL pipeline with Dagster that:

- Ingests data into DuckDB

- Transforms data into reports with dbt

- Runs scheduled reports automatically

- Generates one-time reports on demand

- Visualizes the data with Evidence

Prerequisites

To follow the steps in this guide, you'll need:

- Python 3.10+ and uv installed. For more information, see the Installation guide.

- Familiarity with Python and SQL.

- A basic understanding of data pipelines and the extract, transform, and load (ETL) process.
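The tooling prerequisites above can be checked with a short script before you start. This is a minimal sketch, not part of Dagster: the function name `check_prerequisites` and its parameters are hypothetical, and it only checks the Python version and whether a `uv` executable is on your `PATH`.

```python
import shutil
import sys

MIN_PYTHON = (3, 10)  # minimum version stated in the prerequisites


def check_prerequisites(version=None, which=shutil.which):
    """Return a list of human-readable problems; an empty list means none were found.

    `version` and `which` are injectable for testing (hypothetical parameters).
    """
    version = tuple(version or sys.version_info[:2])
    problems = []
    if version < MIN_PYTHON:
        problems.append(f"Python {version[0]}.{version[1]} found, 3.10+ required")
    # shutil.which returns None when the executable is not on PATH
    if which("uv") is None:
        problems.append("uv not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print(problem)
```

If the script prints nothing, both checks passed. If you plan to follow the pip instructions instead of uv, the `uv` warning can be ignored.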

Step 1: Set up your Dagster environment

- uv

- pip

-

Open your terminal and scaffold a new Dagster project:

uvx -U create-dagster project etl-tutorial -

Respond

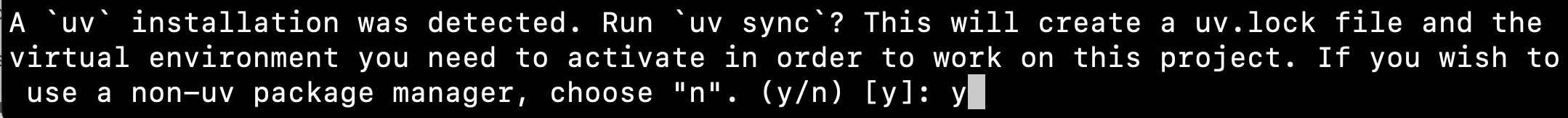

yto the prompt to runuv syncafter scaffolding

-

Change to the

etl-tutorialdirectory:cd etl-tutorial -

Activate the virtual environment:

- MacOS/Unix

- Windows

source .venv/bin/activate.venv\Scripts\activate

-

Open your terminal and scaffold a new Dagster project:

create-dagster project etl-tutorial -

Change to the

etl-tutorialdirectory:cd etl-tutorial -

Create and activate a virtual environment:

- MacOS/Unix

- Windows

python -m venv .venvsource .venv/bin/activatepython -m venv .venv.venv\Scripts\activate
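After activating the environment, you may want to confirm that the Python interpreter you are running actually belongs to it. The sketch below uses the standard `sys.prefix` and `sys.base_prefix` comparison; the helper name `in_virtualenv` and its parameters are hypothetical and exist only for illustration.

```python
import sys


def in_virtualenv(prefix=None, base_prefix=None):
    """Return True when the interpreter runs inside a virtual environment.

    Inside a venv, sys.prefix points at the environment directory while
    sys.base_prefix points at the Python installation it was created from.
    """
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```

Run it with the same `python` you will use for the rest of the tutorial; if it reports that no virtual environment is active, repeat the activation command for your platform.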

Step 2: Launch the Dagster webserver

To make sure Dagster and its dependencies were installed correctly, navigate to the project root directory and start the Dagster webserver:

dg dev
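Once `dg dev` is running, a quick way to confirm the webserver is reachable from a script is to request its URL. This is a rough sketch under one assumption: that the server listens at `http://localhost:3000`. Use the address printed in your terminal if it differs. The function name `webserver_is_up` is hypothetical.

```python
import urllib.error
import urllib.request


def webserver_is_up(url="http://localhost:3000", timeout=2.0):
    """Return True if an HTTP GET to `url` succeeds with status 200.

    The default URL is an assumption; check the output of `dg dev`.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status
        return False


if __name__ == "__main__":
    print("webserver reachable:", webserver_is_up())
```

Run this from a second terminal while `dg dev` is still running in the first.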

Next steps

- Continue this example with extract data